Scaling Data Engineering — Data Quality Tech Debt

Scaling Data Engineering cannot be done without addressing the Quality and Trust issues caused by gaps in Lineage and the ripple effect of changes.

Any conversation about scaling Data Engineering to support ever-growing data volumes, in close partnership with the business, hinges on the quality and trust of data across all touch points in the pipeline. As pipelines grow in volume and complexity, they lose flexibility and scalability, and quality tech debt inevitably increases. As the discipline matures, the goal of Data Engineering is not to write monolithic pipeline code for every use case but to engineer frameworks and tooling built on principles and patterns such as DRY, Single Responsibility, Composability, Separation of Concerns, Templates and Factories, so that pipelines can meet today’s elasticity, scalability and quality constraints.

In this article, I will outline some of the problems in scaling pipelines using an example use case, and bring out the key design criteria. We will then look at some of the emerging architectures and solutions that let us start addressing scale and quality issues.

Use Case — The Story of Marketing 360

In a nutshell, Marketing 360 is the unification of marketing data across all touch points in the customer journey. It applies to any industry or organization that serves customers. At a high level, it involves a combination of ETL (Extract, Transform, Load) and/or ELT (Extract, Load, Transform) over diverse sources (online behavior, offline behavior, transaction behavior, marketing spend, campaign behavior, social behavior, etc.). It may start out with core requirements around online, offline and marketing sources that map to perhaps a couple of pipelines.

However, as the business changes and data needs evolve, there is a constant stream of changes to sources that ripples across pipelines, including new pipelines that are being created. The changes include data attributes, schema, business rules, privacy, contracts and more. The impact is felt across various layers of the pipelines, and the end result is brittleness and low testability due to complex interdependencies.
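To make the ripple effect concrete, here is a minimal Python sketch of detecting a change in one source's schema before it reaches downstream pipelines. The column names, types and the `diff_schemas` helper are all hypothetical, invented for this illustration; a real pipeline would read schemas from a catalog or from the source itself.

```python
from dataclasses import dataclass

# Hypothetical schemas (column name -> type) for one marketing source,
# before and after an upstream change.
OLD_SCHEMA = {"customer_id": "string", "channel": "string", "spend": "float"}
NEW_SCHEMA = {
    "customer_id": "string",
    "channel": "string",
    "spend": "decimal",
    "consent_flag": "boolean",
}


@dataclass
class SchemaDiff:
    added: dict    # columns present only in the new schema
    removed: dict  # columns present only in the old schema
    retyped: dict  # column -> (old_type, new_type)


def diff_schemas(old: dict, new: dict) -> SchemaDiff:
    """Compare two schemas and report every change a pipeline must absorb."""
    added = {col: typ for col, typ in new.items() if col not in old}
    removed = {col: typ for col, typ in old.items() if col not in new}
    retyped = {
        col: (old[col], new[col])
        for col in old.keys() & new.keys()
        if old[col] != new[col]
    }
    return SchemaDiff(added, removed, retyped)


diff = diff_schemas(OLD_SCHEMA, NEW_SCHEMA)
```

Even in this toy case, a single source change produces two things to review (a new privacy-related column and a retyped spend column), and every pipeline that reads the source inherits both.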

The universe of design factors that lead to tech debt, and in turn to quality and scale issues, is large. I would like to highlight the following:

Data Lineage, Schema, Data Attributes and Business Rules.

In the following section, let’s take a look at some of the emerging architectures and tooling that tackle these issues.

Design Principles and Emerging Frameworks

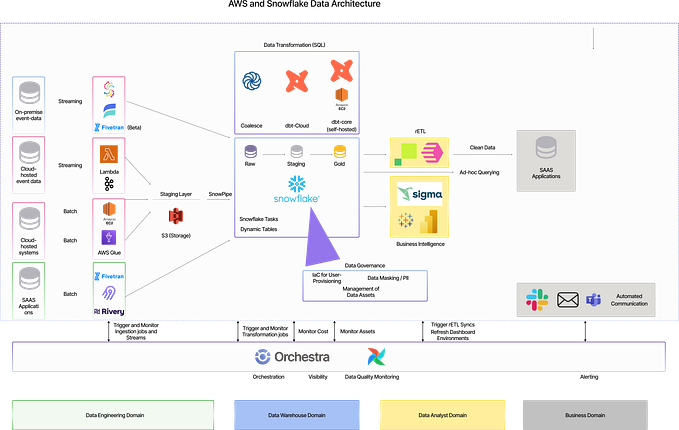

In the Data Engineering world, principles such as DRY (Don’t Repeat Yourself), SRP (Single Responsibility Principle), Composability and Templates are the foundational elements that enable capabilities such as data lineage, schema evolution and business rule changes. The Big Data ecosystem is evolving rapidly, with PaaS, IaaS and SaaS players, and every combination of these, offering their own flavor of solution to the quality and scale problem. In this article, I would like to highlight a couple of solutions that came into existence as a result of quality and testing challenges, and show the key design principles in how they are used: Great Expectations and dbt. Both are available as open source packages, with increasing adoption in the community and with integrations and third-party solutions across the spectrum of providers and partners.

Great Expectations

From the Great Expectations blog: “Great Expectations helps data teams eliminate pipeline debt, through data testing, documentation, and profiling.”

The framework is config-driven and template-driven, with a combination of APIs and a CLI that allows highly flexible usage. The end result is a documented data schema and set of attributes, in the form of expectations that can be executed against a configurable execution engine.

The sweet spot where a framework like Great Expectations fits like a glove is the Extract and Load parts of the pipeline, which relate directly to raw or source data attributes and their loading rules. Expectations are a good mechanism for understanding schema and a means of understanding lineage.
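The idea of expectations as executable documentation can be sketched in plain Python. To be clear, this is not the Great Expectations API: the `not_null`, `between` and `validate` helpers, the column names and the sample rows are all hypothetical, written only to show what a declarative check on raw data looks like.

```python
from typing import Any, Callable

Row = dict[str, Any]
Check = Callable[[list[Row]], list[int]]


def not_null(column: str) -> Check:
    """Expect every row to have a non-null value in `column`."""
    def check(rows: list[Row]) -> list[int]:
        return [i for i, row in enumerate(rows) if row.get(column) is None]
    return check


def between(column: str, low: float, high: float) -> Check:
    """Expect non-null values in `column` to fall within [low, high]."""
    def check(rows: list[Row]) -> list[int]:
        return [
            i for i, row in enumerate(rows)
            if row.get(column) is not None and not (low <= row[column] <= high)
        ]
    return check


# The expectations double as documentation of the source's schema and rules.
EXPECTATIONS: dict[str, Check] = {
    "customer_id is never null": not_null("customer_id"),
    "spend is between 0 and 10000": between("spend", 0, 10_000),
}


def validate(rows: list[Row], expectations: dict[str, Check]) -> dict[str, list[int]]:
    """Return, for each expectation, the indexes of the rows that violate it."""
    return {name: check(rows) for name, check in expectations.items()}


rows = [
    {"customer_id": "c1", "spend": 120.0},
    {"customer_id": None, "spend": 40.0},
    {"customer_id": "c3", "spend": -5.0},
]
report = validate(rows, EXPECTATIONS)
```

Because the checks are data rather than code buried inside a pipeline, the same list can be run at load time and read by a human as a description of what the source is supposed to look like.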

dbt (Data Build Tool)

From getdbt.com: “dbt applies the principles of software engineering to analytics code, an approach that dramatically increases your leverage as a data analyst”. The essence of dbt is SQL models, which hold the business logic behind the transforms that land data in a data warehouse, or in a SQL engine like Presto, for consumption by the BI layer.

Here’s an example query that creates and populates Table C from Table A and Table B. When the model is compiled to executable SQL, dbt replaces each model named in a ref function with its relation name. Most importantly, dbt uses the ref function to determine the order in which to execute the models, in essence building the dependency graph and providing the lineage.

    -- materialization defined at a model level
    {{ config(materialized="table") }}

    SELECT
        *
    FROM
        {{ ref("tableA") }} A
    LEFT JOIN
        {{ ref("tableB") }} B
    ON
        A._key = B._key

In dbt, you can combine SQL with Jinja, a templating language. Using Jinja turns your dbt project into a programming environment for SQL, giving you the ability to do things that aren’t normally possible in SQL, for example abstracting snippets of SQL into reusable macros. Macros can be invoked like functions from models. This makes it possible to reuse SQL between models, so common business logic is defined once, in keeping with the DRY design principle. As an example:

    {% macro fahrenheit_to_celsius(column_name, precision=2) %}
        (({{ column_name }}-32)*5/9)::numeric(16, {{ precision }})
    {% endmacro %}

This macro may be used in a model like this:

    select id as location_id, {{ fahrenheit_to_celsius('temp_fahrenheit') }} as temp_celsius,… from location_data.weather
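To see what the macro call turns into, here is a small Python sketch that mimics the text substitution. dbt does the real rendering through Jinja; the Python function below is only a stand-in I wrote for illustration, and the rendered model leaves out the columns elided in the example above.

```python
def fahrenheit_to_celsius(column_name: str, precision: int = 2) -> str:
    """Return the SQL expression that the macro above expands to.

    A plain-Python stand-in for the Jinja macro, not dbt itself.
    """
    return f"(({column_name}-32)*5/9)::numeric(16, {precision})"


def render_weather_model() -> str:
    """Build the SQL a warehouse would receive for the example model.

    Only the two columns spelled out in the example are included.
    """
    celsius = fahrenheit_to_celsius("temp_fahrenheit")
    return (
        f"select id as location_id, {celsius} as temp_celsius "
        "from location_data.weather"
    )


compiled_sql = render_weather_model()
```

The conversion formula now lives in exactly one place. If the business rule changes (say, a different precision), every model that calls the macro picks up the change on the next compile.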

Enabling Data Lineage and DRY

The two frameworks above operate in the Extract-Load-Transform phases of the pipeline, providing mechanisms to check lineage and to evolve business rules. This gives visibility into schema dependencies and business rule impacts when requirement changes need to be reviewed and implemented. The end result is the ability to respond quickly and make changes in an agile way, which allows pipelines to scale.
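As a rough illustration of how ref calls yield lineage, the Python sketch below extracts them from model SQL, derives a run order, and lists what is impacted when one model changes. dbt builds its graph by parsing the whole project, not with a regular expression like this one; the model names beyond tableA and tableB, the raw table names and the helper functions are all hypothetical.

```python
import re
from graphlib import TopologicalSorter

# Hypothetical project: model name -> SQL text of the model.
MODELS = {
    "tableA": "select * from raw.online_events",
    "tableB": "select * from raw.campaign_spend",
    "tableC": (
        'select * from {{ ref("tableA") }} A '
        'left join {{ ref("tableB") }} B on A._key = B._key'
    ),
    "marketing_360": "select * from {{ ref('tableC') }}",
}

# Matches {{ ref("name") }} or {{ ref('name') }} and captures the name.
REF_PATTERN = re.compile(r"""\{\{\s*ref\(\s*['"]([^'"]+)['"]\s*\)\s*\}\}""")


def build_graph(models: dict[str, str]) -> dict[str, set[str]]:
    """Map each model to the set of models it references (its parents)."""
    return {name: set(REF_PATTERN.findall(sql)) for name, sql in models.items()}


def run_order(graph: dict[str, set[str]]) -> list[str]:
    """Order models so that every parent is built before its children."""
    return list(TopologicalSorter(graph).static_order())


def downstream_of(graph: dict[str, set[str]], changed: str) -> set[str]:
    """Return every model that depends, directly or not, on `changed`."""
    impacted: set[str] = set()
    frontier = [changed]
    while frontier:
        current = frontier.pop()
        for model, parents in graph.items():
            if current in parents and model not in impacted:
                impacted.add(model)
                frontier.append(model)
    return impacted


graph = build_graph(MODELS)
order = run_order(graph)
impacted = downstream_of(graph, "tableA")
```

The same graph answers both questions that matter when a requirement changes: in what order do things need to be rebuilt, and which downstream models need to be reviewed.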

In Closing

As the demand for data skyrockets, so do expectations for the speed and scale of end-to-end processing, with quality and trust as observable, monitorable elements. The next generation of pipelines has to consider the design and architecture elements that will fulfill these expectations. We are seeing data engineering evolve in these respects, and this will lead to very interesting outcomes, maybe new frontiers where cloud and AI paradigms meld with data engineering. It’s going to be an interesting journey!